PERFORMANCE TUNING MOBILE API – NETWORK

A mobile application by default has a network component: the portion from the phone to the Ethernet card of the API servers. Who has not seen the US commercials asking “can you hear me now?” Canadian wireless service providers spend significant effort planning their network coverage, identifying poor performance, doing capacity planning and ensuring signal coverage. This includes crowdsourcing, BI, specialized tools and even driving around. Wireless networks are, however, not static, and everything from the number of leaves on the trees to the time of day affects the signal strength and capacity at a given location (wireless bandwidth is limited and shared per cell frequency and cell coverage). Add to this the nearly 10,000,000 square km of geography we have in Canada and you can understand the enormity of testing the network.

The network can, however, be broken into 4 parts:

- The Wireless Over Air portion (phone to first tower)

- The Backhaul portion (the wireless provider’s network)

- Your ISP portion

- Your Internal Network Portion

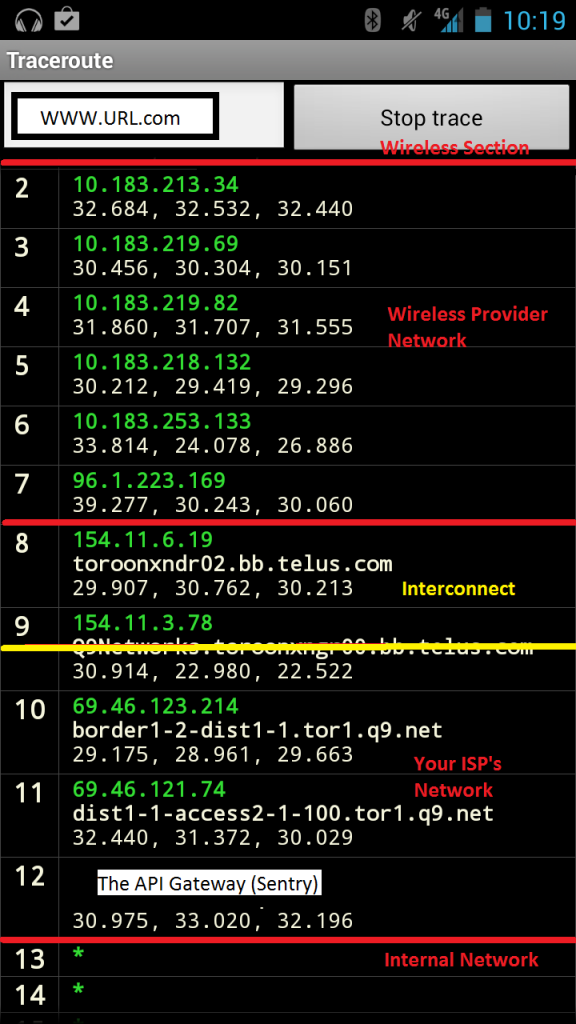

I took the following trace route from my phone in a Toronto subway station. Between hops 1 and 2 is the wireless Over the Air portion. Hops 2-7 go through my wireless service provider’s Backhaul. Hops 8 and 9 are my wireless service provider’s link to the interconnect, or Canadian Torix, marked in yellow. Hops 10-12 belong to the ISP of the API servers I was tracing to. This segment usually ends with a firewall, proxy or API gateway, which hides the internal network that would start at hop 13. Often the API server is near the edge, but the Enablers could be some number of hops behind hop 13.

Although trace route or ping will give some idea of the number of hops and the latency, they are usually based on a very small packet size and are therefore not representative of your application traffic. This trace route was also sent from only 1 of possibly billions of locations in Canada: a metro core close to Torix. The larger the API responses are, the more they are affected by less than ideal network connectivity. If your application’s request and response messages are measured in megabytes, network conditions become far more critical than for an application with smaller request or response messages.
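To get a feel for why message size matters, here is a rough back-of-the-envelope sketch. It assumes a first-order model in which transfer time is one round trip plus the payload size divided by the available bandwidth. The function name and the latency and bandwidth figures are hypothetical, not measurements, and the model ignores TCP slow start, retransmissions and protocol overhead.

```python
def estimate_transfer_ms(payload_bytes, rtt_ms, bandwidth_mbps):
    """First-order estimate: one round trip plus time to push the bits."""
    bits = payload_bytes * 8
    serialization_ms = bits / (bandwidth_mbps * 1_000_000) * 1000
    return rtt_ms + serialization_ms


# Hypothetical link: 80 ms round trip, 5 Mbps of usable bandwidth.
for label, size in [("2 KB response", 2_000), ("2 MB response", 2_000_000)]:
    print(f"{label}: ~{estimate_transfer_ms(size, 80, 5):.0f} ms")
```

Under these assumed numbers the small response costs about 83 ms, almost all of it latency, while the large one takes about 3.3 seconds, almost all of it bandwidth. A small ping packet only ever shows you the first case.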

Network = User Experience – ( Client + Enabler + API)
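As a minimal worked example of this subtraction, the sketch below plugs in made-up timings. The function name and every number are assumptions for illustration only; real values would come from your own client, API and Enabler measurements.

```python
def network_time_ms(user_experience_ms, client_ms, enabler_ms, api_ms):
    """Network = User Experience - (Client + Enabler + API)."""
    return user_experience_ms - (client_ms + enabler_ms + api_ms)


# Hypothetical timings for one screen refresh, in milliseconds.
total = 1200    # what the user experiences end to end
client = 250    # rendering and processing on the phone
enabler = 300   # back-end systems behind the API
api = 150       # the API layer itself

print(network_time_ms(total, client, enabler, api))  # prints 500
```

With these assumed figures, 500 ms of the 1200 ms the user waits is spent on the network.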

From the Client post, we know the effect the Client has on performance. But how do we nullify the effects of the API and the Enablers to determine the effect the Network has on the application? This is especially hard given that at least a few locations and different wireless service providers should be tested to establish additional points of reference. Do we need to hire a guy to walk around saying “can you hear me now?” or to use the application in bizarre places?

SOAPSonar offers functional and performance testing in a single tool, using the same test cases but running them in different modes. SOAPSonar tests the API directly, without the client, and can load test these services through virtual clients. After selecting your automated data sources, success criteria and so on, and testing the functionality of your new REST application API using SOAPSonar, a tester can place SOAPSonar on the same network segment as the API and run the tests again in performance mode. If you wish to understand the impact of load, have SOAPSonar use virtual clients or TPS to load the API while doing the performance test. This provides a baseline for the effect that the API and Enablers have on user experience, with the client removed and essentially no network in the path.

SOAPSonar Server also supports distributed load agents: the ability to develop and trigger a test from a central location and run it from remotely placed machines. Fitting those machines with data sticks from different wireless network providers allows a comparison across different wireless networks and locations. Each of these remote agents can be assigned 1 or more virtual users in SOAPSonar and is linked via IP address. The same test described in the previous paragraph should be run again, this time including these remotely placed agents and their virtual users. The test results for the remote agents will differ from those of the local test above, because the exact same test now includes the network between those remote agents and the API. The performance report for each agent under load includes the results for the local agent vs. the remote agents. The difference is the effect network and location have on your application.
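The local vs. remote comparison can be sketched in a few lines. This is not SOAPSonar's report format or API; it assumes you have already exported response times per agent into plain lists, and the agent names and numbers are hypothetical. The median is used here simply because it is less sensitive to a few slow outliers than the average.

```python
from statistics import median


def network_overhead_ms(local_samples, remote_samples_by_agent):
    """Median remote response time minus the median local baseline, per agent."""
    baseline = median(local_samples)
    return {
        agent: median(samples) - baseline
        for agent, samples in remote_samples_by_agent.items()
    }


# Hypothetical response times in milliseconds for the same test case.
local = [110, 120, 130]  # agent on the same network segment as the API
remote = {
    "carrier_a_toronto": [300, 320, 340],
    "carrier_b_halifax": [400, 450, 500],
}

for agent, overhead in network_overhead_ms(local, remote).items():
    print(f"{agent}: ~{overhead} ms attributable to network and location")
```

Because the local agent sees the API and Enablers without the wireless, backhaul and ISP portions, whatever is left after subtracting its baseline is a reasonable estimate of what network and location add for each remote agent.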

Understanding expected behavior and isolating the other variables does not assure consistent network service, nor is there much you can do about some of it. The wireless over-the-air portion and the backhaul portion are not under your control but that of the user’s wireless provider. Your ISP portion and your internal network portion, however, are under your design, and multi-homing to more than one ISP, the placement of your datacenters and similar choices can improve network performance. The same process is used for testing the impact of, and the network design for, a move to cloud-based services.

A better example of a business requirement would be “the application will refresh in 1 second over the 4G networks of Bell, TELUS and Rogers in downtown Toronto, Vancouver, Montreal and Halifax in 80% of the test cases.” Another could be that the aggregate message size on any single screen will not be more than abc kb. This design would ensure the network has less of an effect, although it could increase the number of services and screens needed on the client.
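A requirement phrased this way is easy to check mechanically. The sketch below assumes refresh times have been collected in milliseconds for one carrier and city, and that "in 1 second" means 1000 ms or less; the function name, the inclusive threshold and the sample values are all assumptions for illustration.

```python
def meets_requirement(samples_ms, threshold_ms=1000, required_fraction=0.8):
    """True if enough test cases refreshed within the threshold."""
    if not samples_ms:
        return False  # no evidence, so the requirement is not shown to be met
    within = sum(1 for sample in samples_ms if sample <= threshold_ms)
    return within / len(samples_ms) >= required_fraction


# Hypothetical refresh times for one carrier in one city.
downtown_run = [800, 900, 950, 990, 1500]
print(meets_requirement(downtown_run))  # prints True: 4 of 5 are within 1 second
```

Running the same check per carrier and per city gives a pass or fail for each combination named in the requirement, rather than one blended average that can hide a weak location.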